Aggregation

There are three types of aggregate functions available in RasterFrames: tile aggregate, DataFrame aggregate, and element-wise local aggregate. In the tile aggregate functions, we are computing a statistical summary per row of a tile column in a DataFrame. In the DataFrame aggregate functions, we are computing statistical summaries over all of the cell values and across all of the rows in the DataFrame or group. In the element-wise local aggregate functions, we are computing the element-wise statistical summary across a DataFrame or group of tiles. In the latter two cases, when vector data is the grouping column, the results are zonal statistics.

Tile Mean Example

We can illustrate aggregate differences by computing an aggregate mean. First, we create a sample DataFrame of 2 tiles. The tiles will contain normally distributed cell values with the first row’s mean at 1.0 and the second row’s mean at 3.0. For details on use of the Tile class see the page on numpy interoperability.

from pyrasterframes.rf_types import Tile, CellType

t1 = Tile(1 + 0.1 * np.random.randn(5,5), CellType('float64raw'))

t1.cells # display the array in the Tile

array([[1.029, 0.901, 0.897, 1.002, 0.932],

[1.013, 0.915, 0.968, 1.035, 1.072],

[0.882, 1.089, 0.845, 0.918, 0.943],

[1.019, 1.088, 1.095, 0.872, 1.064],

[0.902, 0.937, 1.106, 0.993, 0.93 ]])

t5 = Tile(5 + 0.1 * np.random.randn(5,5), CellType('float64raw'))

t5.cells

array([[4.935, 4.815, 5.069, 4.968, 5.165],

[4.976, 4.883, 4.881, 5.074, 5.065],

[5.146, 5.136, 4.943, 5.14 , 5.021],

[4.918, 5.026, 5.213, 4.918, 4.719],

[5.027, 4.953, 4.947, 5.042, 5.039]])

Create a Spark DataFrame from the Tile objects.

import pyspark.sql.functions as F

from pyspark.sql import Row

rf = spark.createDataFrame([

Row(id=1, tile=t1),

Row(id=2, tile=t5)

]).orderBy('id')

We use the rf_tile_mean function to compute the tile aggregate mean of cells in each row of column tile. The mean of each tile is computed separately, so the first mean is about 1.0 and the second mean is about 3.0. Notice that the number of rows in the DataFrame is the same before and after the aggregation.

rf.select(F.col('id'), rf_tile_mean(F.col('tile')))

| id | rf_tile_mean(tile) |

|---|---|

| 1 | 0.9777736017127512 |

| 2 | 5.000752105081167 |

We use the rf_agg_mean function to compute the DataFrame aggregate, which averages values across the fifty cells in two rows. Note that only a single row is returned since the average is computed over the full DataFrame.

rf.agg(rf_agg_mean(F.col('tile')))

| rf_agg_mean(tile) |

|---|

| 2.9892628533969594 |

We use the rf_agg_local_mean function to compute the element-wise local aggregate mean across the two rows. For this aggregation, we are computing the mean of one value of 1.0 and one value of 3.0 to arrive at the element-wise mean, but doing so twenty-five times, one for each position in the tile.

To compute an element-wise local aggregate, tiles need to have the same dimensions. In this case, both tiles have 5 rows and 5 columns. If we tried to compute an element-wise local aggregate over the DataFrame without equal tile dimensions, we would get a runtime error.

rf.agg(rf_agg_local_mean('tile')) \

.first()[0].cells.data # display the contents of the Tile array

array([[2.982, 2.858, 2.983, 2.985, 3.049],

[2.994, 2.899, 2.925, 3.054, 3.068],

[3.014, 3.113, 2.894, 3.029, 2.982],

[2.969, 3.057, 3.154, 2.895, 2.892],

[2.964, 2.945, 3.026, 3.017, 2.984]])

Cell Counts Example

We can also count the total number of data and NoData cells over all the tiles in a DataFrame using rf_agg_data_cells and rf_agg_no_data_cells. There are ~3.8 million data cells and ~1.9 million NoData cells in this DataFrame. See the section on “NoData” handling for additional discussion on handling missing data.

rf = spark.read.raster('https://rasterframes.s3.amazonaws.com/samples/MCD43A4.006/11/05/2018233/MCD43A4.A2018233.h11v05.006.2018242035530_B02.TIF')

stats = rf.agg(rf_agg_data_cells('proj_raster'), rf_agg_no_data_cells('proj_raster'))

stats

| rf_agg_data_cells(proj_raster) | rf_agg_no_data_cells(proj_raster) |

|---|---|

| 3825959 | 1934041 |

Statistical Summaries

The statistical summary functions return a summary of cell values: number of data cells, number of NoData cells, minimum, maximum, mean, and variance, which can be computed as a tile aggregate, a DataFrame aggregate, or an element-wise local aggregate.

The rf_tile_stats function computes summary statistics separately for each row in a tile column as shown below.

rf = spark.read.raster('https://rasterframes.s3.amazonaws.com/samples/luray_snp/B02.tif')

stats = rf.select(rf_tile_stats('proj_raster').alias('stats'))

stats.printSchema()

root

|-- stats: struct (nullable = true)

| |-- data_cells: long (nullable = false)

| |-- no_data_cells: long (nullable = false)

| |-- min: double (nullable = false)

| |-- max: double (nullable = false)

| |-- mean: double (nullable = false)

| |-- variance: double (nullable = false)

stats.select('stats.min', 'stats.max', 'stats.mean', 'stats.variance')

Showing only top 5 rows.

| min | max | mean | variance |

|---|---|---|---|

| 239.0 | 2047.0 | 359.02950246710566 | 23843.848327795215 |

| 161.0 | 3811.0 | 300.751678466797 | 19873.49985985365 |

| 215.0 | 10201.0 | 1242.8558654785195 | 2931419.376503068 |

| 253.0 | 990.0 | 338.8358725761777 | 9432.898962465755 |

| 175.0 | 11004.0 | 984.6691741943379 | 1987081.8477228696 |

The rf_agg_stats function aggregates over all of the tiles in a DataFrame and returns a statistical summary of all cell values as shown below.

stats = rf.agg(rf_agg_stats('proj_raster').alias('stats')) \

.select('stats.min', 'stats.max', 'stats.mean', 'stats.variance')

stats

| min | max | mean | variance |

|---|---|---|---|

| 3.0 | 12103.0 | 542.1327946489893 | 685615.201702677 |

The rf_agg_local_stats function computes the element-wise local aggregate statistical summary as shown below. The DataFrame used in the previous two code blocks has unequal tile dimensions, so a different DataFrame is used in this code block to avoid a runtime error.

rf = spark.createDataFrame([

Row(id=1, tile=t1),

Row(id=3, tile=t1 * 3),

Row(id=5, tile=t1 * 5)

]).agg(rf_agg_local_stats('tile').alias('stats'))

agg_local_stats = rf.select('stats.min', 'stats.max', 'stats.mean', 'stats.variance').collect()

for r in agg_local_stats:

for stat in r.asDict():

print(stat, ':\n', r[stat], '\n')

min :

Tile(dimensions=[5, 5], cell_type=CellType(float64, nan), cells=

[[1.028512949249102 0.9006189997532675 0.8971728835747308

1.002068356214286 0.9321462409230148]

[1.0126667891814458 0.9148072259708329 0.9684115387004245

1.0347924734353402 1.0716593316892458]

[0.8817176739676914 1.0890033858198382 0.8448466821569003

0.918312790631051 0.9428310923100086]

[1.0189912660713847 1.0876366426868298 1.0946450901390512

0.8723867769755788 1.0641414795651045]

[0.9017652499597776 0.9373817930893357 1.1057028212801712

0.9925594019717625 0.9295611075026057]])

max :

Tile(dimensions=[5, 5], cell_type=CellType(float64, nan), cells=

[[5.14256474624551 4.503094998766338 4.485864417873654 5.01034178107143

4.6607312046150735]

[5.063333945907229 4.574036129854164 4.842057693502123 5.173962367176701

5.358296658446228]

[4.408588369838457 5.445016929099191 4.224233410784501 4.591563953155255

4.714155461550043]

[5.094956330356924 5.438183213434149 5.4732254506952565

4.361933884877894 5.320707397825522]

[4.508826249798888 4.686908965446678 5.528514106400856 4.962797009858813

4.647805537513029]])

mean :

Tile(dimensions=[5, 5], cell_type=CellType(float64, nan), cells=

[[3.0855388477473062 2.7018569992598027 2.6915186507241926

3.006205068642858 2.7964387227690444]

[3.038000367544337 2.7444216779124986 2.9052346161012736

3.1043774203060206 3.2149779950677373]

[2.645153021903074 3.2670101574595147 2.534540046470701

2.7549383718931533 2.828493276930026]

[3.056973798214154 3.2629099280604894 3.2839352704171536

2.6171603309267364 3.1924244386953133]

[2.705295749879333 2.812145379268007 3.3171084638405133

2.9776782059152875 2.788683322507817]])

variance :

Tile(dimensions=[5, 5], cell_type=CellType(float64, nan), cells=

[[2.820903698061562 2.1629722205775375 2.146451154724792

2.6777093080693373 2.317057638578418]

[2.734650735762827 2.2316593618358693 2.5008557554349977

2.8554545682091454 3.062543261857707]

[2.0731361508986543 3.1624756648721917 1.9033757769373931

2.2487956838309 2.3704812496706236]

[2.76891520087937 3.154542577373542 3.1953276623080864 2.029489836378236

3.0197255694160265]

[2.168481509426721 2.343159002707674 3.2602099439651457

2.6271311105134476 2.3042236068839212]])

Histogram

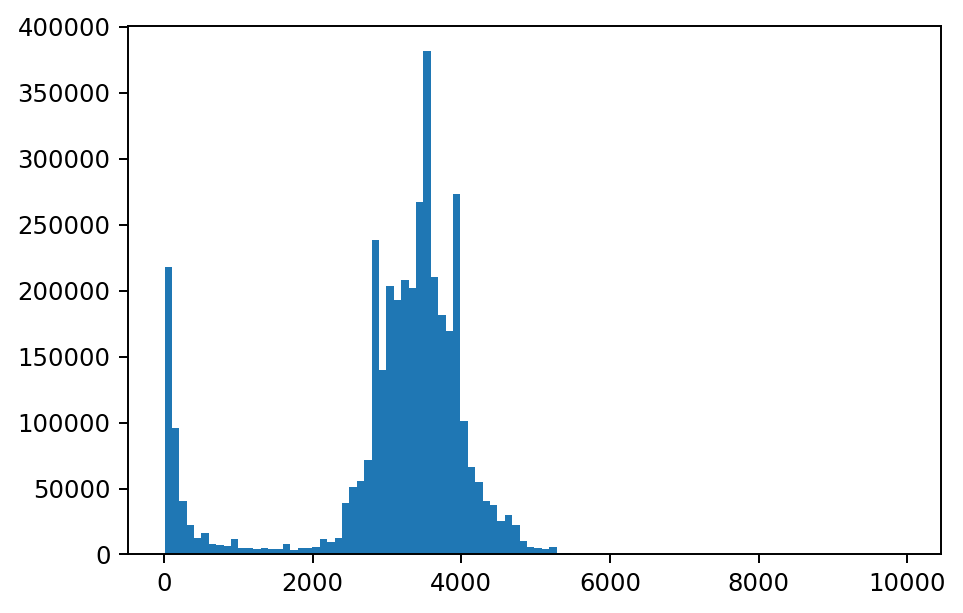

The rf_tile_histogram function computes a count of cell values within each row of tile and outputs a bins array with the schema below. In the graph below, we have plotted each bin’s value on the x-axis and count on the y-axis for the tile in the first row of the DataFrame.

import matplotlib.pyplot as plt

rf = spark.read.raster('https://rasterframes.s3.amazonaws.com/samples/MCD43A4.006/11/05/2018233/MCD43A4.A2018233.h11v05.006.2018242035530_B02.TIF')

hist_df = rf.select(rf_tile_histogram('proj_raster')['bins'].alias('bins'))

hist_df.printSchema()

bins_row = hist_df.first()

values = [int(bin['value']) for bin in bins_row.bins]

counts = [int(bin['count']) for bin in bins_row.bins]

plt.hist(values, weights=counts, bins=100)

plt.show()

root

|-- bins: array (nullable = true)

| |-- element: struct (containsNull = true)

| | |-- value: double (nullable = false)

| | |-- count: long (nullable = false)

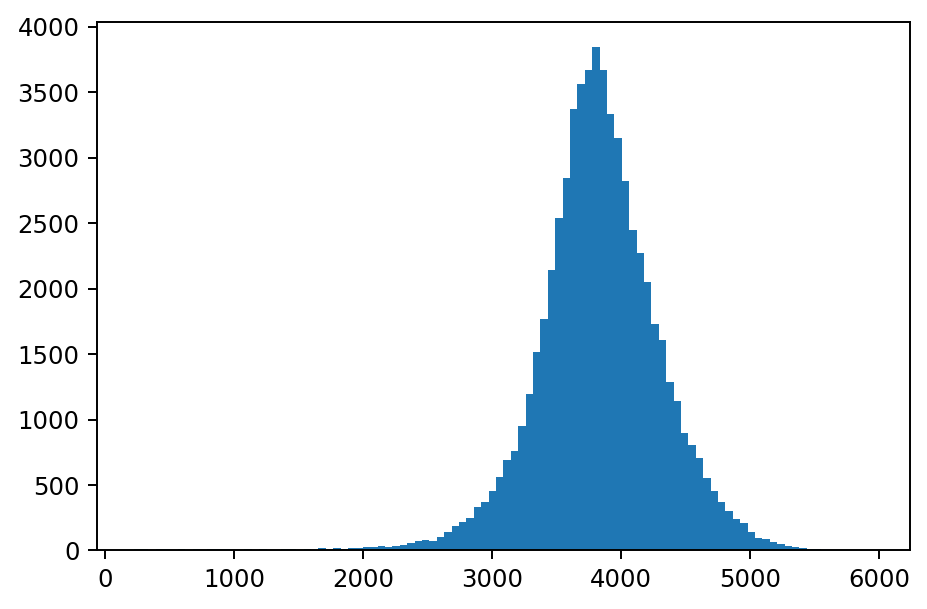

The rf_agg_approx_histogram function computes a count of cell values across all of the rows of tile in a DataFrame or group. In the example below, the range of the y-axis is significantly wider than the range of the y-axis on the previous histogram since this histogram was computed for all cell values in the DataFrame.

bins_list = rf.agg(

rf_agg_approx_histogram('proj_raster')['bins'].alias('bins')

).collect()

values = [int(row['value']) for row in bins_list[0].bins]

counts = [int(row['count']) for row in bins_list[0].bins]

plt.hist(values, weights=counts, bins=100)

plt.show()